November 10th, 2020. Despite the ongoing storm of the COVID-19 pandemic, one of the biggest moments in computing history was taking place. From Apple Park, Apple’s headquarters in Cupertino, California, came the announcement of the M1 processor, the most powerful chip the company had ever created. For those who don’t know, Apple had previously designed its own chips only for the iPhone, while its MacBook processors were supplied entirely by Intel. This was the first time Apple had designed a chip specifically for the Mac instead of using an Intel product. The new chip is built on ARM’s architecture and, with its spectacular performance, makes a compelling case that ARM-based chips are the future.

“I can’t remember the last time reviews for an Apple product were so universally positive, especially considering these are machines that look the same as the previous-gen. Apple simply excelled themselves with the ARM transition.”

— Benjamin Mayo, Financial Advisor at J.P. Morgan.

The conversion of Apple from Intel’s microprocessors to ARM’s architecture has been nurtured and cultivated for many years. It represents the culmination of a decade of planning and a radical shift in the computing industry. M1 is not only a chip – it’s a revolution. And it all started from a small, simple seed.

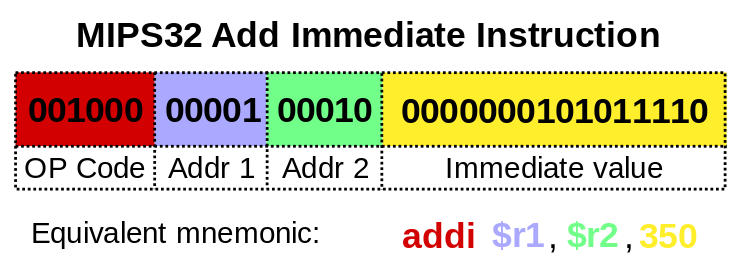

INSTRUCTION SET

To truly understand what Apple has done, we need a journey through history and technology. Central Processing Units (or CPUs) are the brains of a computer, but they cannot run by themselves. They only work when given instructions: binary codes that tell the processor what to do, such as moving data from memory to registers or performing a calculation like subtraction or multiplication. The collection of these instructions is called an instruction set.
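To make the idea of “binary codes” concrete, here is a minimal sketch of how a processor might decode and execute an instruction. The 16-bit layout, the opcode numbers, and the names `decode` and `execute` are all invented for illustration; they do not correspond to x86, ARM, or any real instruction set.

```python
# Hypothetical 16-bit instruction layout, invented for this sketch:
#   bits 15-12 opcode | bits 11-8 dest register | bits 7-4 source A | bits 3-0 source B
OPCODES = {0x1: "ADD", 0x2: "SUB", 0x3: "MUL"}


def decode(word):
    """Split a 16-bit instruction word into (mnemonic, dest, src_a, src_b)."""
    opcode = (word >> 12) & 0xF
    dest = (word >> 8) & 0xF
    src_a = (word >> 4) & 0xF
    src_b = word & 0xF
    return OPCODES[opcode], dest, src_a, src_b


def execute(word, regs):
    """Apply one instruction to the register list in place."""
    op, dest, src_a, src_b = decode(word)
    if op == "ADD":
        regs[dest] = regs[src_a] + regs[src_b]
    elif op == "SUB":
        regs[dest] = regs[src_a] - regs[src_b]
    elif op == "MUL":
        regs[dest] = regs[src_a] * regs[src_b]
    return regs


registers = [0, 7, 5, 0]        # r0..r3
execute(0x2312, registers)      # SUB r3, r1, r2
execute(0x3031, registers)      # MUL r0, r3, r1
print(registers)
```

The processor never sees the words “SUB” or “MUL”, only numbers such as `0x2312`; the instruction set is the agreement on what each bit pattern means.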

Now here comes the vital definition: instruction set architecture (ISA). In computer science, an ISA is an abstract model of a computer. It defines an imaginary computer with certain supported data types, a certain instruction set, certain registers, the hardware support for managing main memory, and several other fundamental features. The realization of this imaginary computer is called an implementation.

There can be multiple implementations of an ISA that differ in performance, physical size, monetary cost… but are capable of running the same machine code. Therefore, a lower-performance, lower-cost CPU can replace a higher-performance, higher-cost machine without having to replace the software. Different companies can develop their own processors, using different microarchitectures, and are still able to run the same programs. For example, Intel Pentium and AMD Athlon both implement very close versions of the x86 instruction set, but their internal designs are radically different.
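A rough way to picture this is a single program run on two different “chips”. In the sketch below, the tiny instruction set, the program, and the cycle costs are all made-up assumptions; modelling a microarchitecture as a table of cycle costs is a heavy simplification, used only to show that the results match while the performance differs.

```python
# One program, written once against a toy ISA (hypothetical, for illustration).
PROGRAM = [
    ("LOADI", 0, 6),     # r0 = 6
    ("LOADI", 1, 7),     # r1 = 7
    ("MUL", 2, 0, 1),    # r2 = r0 * r1
    ("ADD", 2, 2, 0),    # r2 = r2 + r0
]

# Two imaginary implementations of the same ISA: they differ only in how
# many clock cycles each instruction takes. The numbers are invented.
BUDGET_CORE = {"LOADI": 1, "ADD": 1, "MUL": 8}
FAST_CORE = {"LOADI": 1, "ADD": 1, "MUL": 2}


def run(program, cycle_cost):
    """Execute the program and return (final registers, total cycles)."""
    regs = [0, 0, 0, 0]
    cycles = 0
    for instr in program:
        op = instr[0]
        if op == "LOADI":
            regs[instr[1]] = instr[2]
        elif op == "ADD":
            regs[instr[1]] = regs[instr[2]] + regs[instr[3]]
        elif op == "MUL":
            regs[instr[1]] = regs[instr[2]] * regs[instr[3]]
        else:
            raise ValueError(f"unknown instruction: {op}")
        cycles += cycle_cost[op]
    return regs, cycles


budget_regs, budget_cycles = run(PROGRAM, BUDGET_CORE)
fast_regs, fast_cycles = run(PROGRAM, FAST_CORE)
print(budget_regs == fast_regs, budget_cycles, fast_cycles)
```

Both cores end with the same register contents because they honour the same ISA; only the cycle count changes, which is exactly why software does not need to be rewritten when the hardware underneath is swapped.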

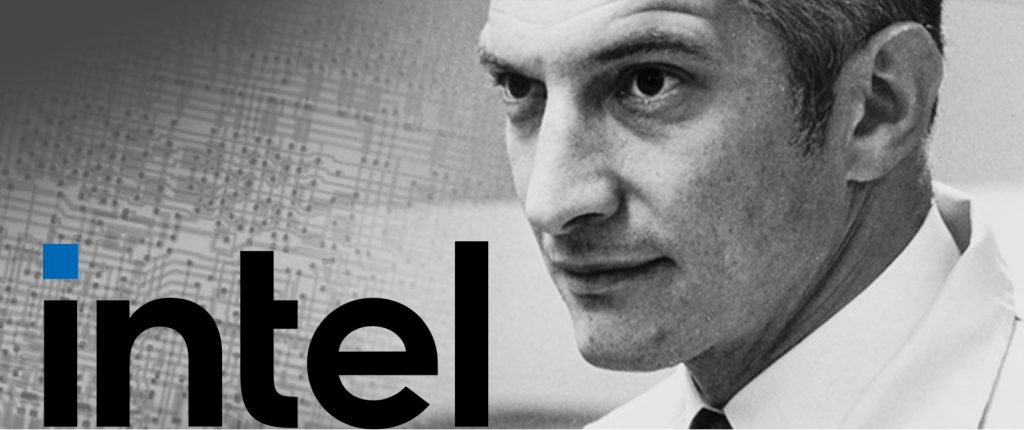

INTEL x86

Since its founding in 1968 by Robert Noyce and Gordon Moore, Intel has been the gatekeeper of mainstream CPUs with its legendary x86 ISA, named after a series of popular chips from the late 70s and early 80s whose model numbers ended in 86. The great-grandfather of them all is the 8086, a 16-bit CPU released in 1978 that became the head of the x86 family. The 80186 and 80286 followed, with the 80286 bringing virtual addressing and an on-chip MMU (Memory Management Unit). The 80386, a 32-bit CPU launched in 1985, is the chip on which Linux was later developed. These chips made the x86 ISA the dominant force in the computing world for decades, with the whole Windows platform built on it.

From the 1980s, chip manufacturers began adding more and more complicated instructions so that their chips would be perceived as better and faster. This approach, in which a single instruction is highly complex and can perform several lower-level operations, is called CISC (Complex Instruction Set Computer). However, these additional instructions slowly bloated the chips. Precious and limited physical space on the chip was taken up by fancy instructions that were hardly ever used by the rest of the system.

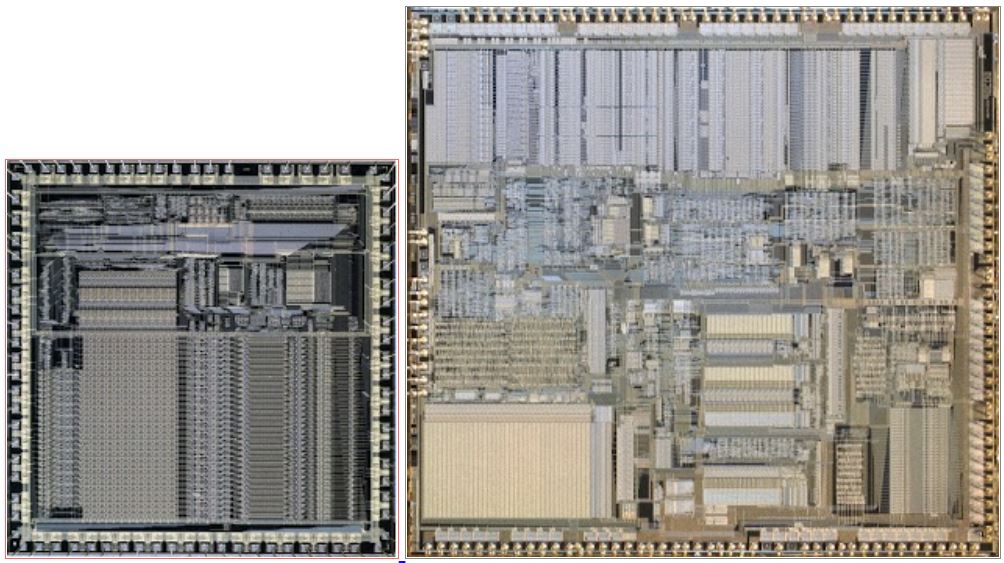

ACORN

While Intel was ruling over the chip industry, in 1983 a UK company by the name of Acorn Computers Ltd decided to go down a different path. Instead of making the system more and more complicated, they chose a simpler approach. This idea of simplifying the instruction set is called RISC (Reduced Instruction Set Computer), as opposed to the messy CISC. It gave designers a new starting point, with a faster and more efficient ISA. The philosophy is not to add any complexity to the machine unless it pays for itself through how frequently it is actually used. Out of this came the Acorn RISC Machine project, otherwise known as ARM [1][2]. This was the birth of the architecture that would be used in virtually all modern smartphones.
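The difference between the two philosophies can be sketched with a single task: adding two numbers that live in memory. The instruction names below (`LOAD`, `ADD`, `STORE`, and the one-shot memory-to-memory add) are hypothetical and do not come from x86 or ARM; the point is only the shape of the trade-off.

```python
def cisc_add_mem(memory, dst, a, b):
    """CISC style: one hypothetical complex instruction reads two memory
    operands, adds them, and writes the result back to memory."""
    memory[dst] = memory[a] + memory[b]
    return 1  # instructions issued


def risc_add_mem(memory, dst, a, b):
    """RISC style: the same work as a sequence of simple instructions.
    Only LOAD and STORE touch memory; ADD works on registers."""
    program = [
        ("LOAD", "r1", a),
        ("LOAD", "r2", b),
        ("ADD", "r3", "r1", "r2"),
        ("STORE", "r3", dst),
    ]
    regs = {}
    for instr in program:
        op = instr[0]
        if op == "LOAD":
            regs[instr[1]] = memory[instr[2]]
        elif op == "ADD":
            regs[instr[1]] = regs[instr[2]] + regs[instr[3]]
        elif op == "STORE":
            memory[instr[2]] = regs[instr[1]]
    return len(program)  # instructions issued


cisc_memory = [3, 4, 0]
risc_memory = [3, 4, 0]
cisc_count = cisc_add_mem(cisc_memory, 2, 0, 1)
risc_count = risc_add_mem(risc_memory, 2, 0, 1)
print(cisc_memory, cisc_count, risc_memory, risc_count)
```

The RISC version issues more instructions, but each one is simple enough to be implemented with far less hardware, which is the bet Acorn’s engineers were making.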

“As we set about designing the ARM, we didn’t really expect it to pull it off. Firstly we thought this RISC idea is so obvious that the big industry will pick up on it. We expected to go into this project finding out why it wasn’t a good idea to do it, and the obstacle just never emerged from the mist. We just kept moving forward through the fog.”

Steve Furber, principal engineer of the BBC Micro and the ARM1 32-bit RISC microprocessor.

The first prototype, ARM1, was manufactured in 1985 using a 3-micron double-level metal CMOS process [3]. As Professor Steve Furber, one of the principal engineers behind the first ARM chip, recounted in an interview, the first time he tested the ARM1 the power consumption reading on the ammeter was zero. It turned out that the engineers had not connected the power supply, yet the chip was still running: it was effectively running off the residual power leaking in from the signal ports. The whole ARM1 chip consumed a mere 0.1 Watt, compared to nearly 2 Watts for Intel’s 386 [4]. Besides power consumption, its simplicity can also be clearly seen in the transistor count: 25,000 transistors versus 275,000 in the 386.

ARM

After the successful prototype in 1985, Acorn launched its first ARM-based computer, the Acorn Archimedes, in 1987 [5]. In late 1990, Advanced RISC Machines (ARM) was spun off from Acorn as a joint venture with investment from Apple and VLSI [6]. From here on, ARM would license its designs for other manufacturers to build, transforming into an IP (intellectual property) company that sells designs rather than chips.

In the beginning, low power consumption was a nice side effect, but it did not matter much on desktop machines whose power cables were plugged directly into the wall. But as portable devices started showing up, ARM chips became the first choice for everything from Nokia mobile phones to Sony Ericsson MP3 players. They also powered handheld game consoles such as Nintendo’s Game Boy Advance. And they were used most widely by the company with which ARM had its closest relationship: the giant Apple.

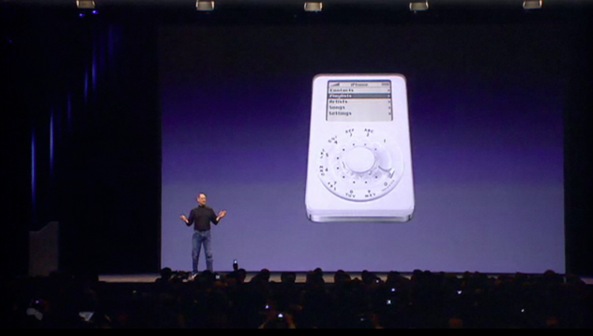

iPhones

ARM Holdings and Apple have had a long and special relationship since the beginning. In 2001, Apple launched the iPod, the legendary MP3 player that put “1,000 songs in your pocket”, using an ARM7T chip based on the ARMv4 architecture. Six years later, in 2007, the first iPhone was introduced, using an ARM11 core based on the ARMv6 architecture. And in 2010, the iPhone 4, one of the best iPhones ever, was released with the Apple A4, Apple’s own chip built around ARM’s Cortex-A8 core. Intel could have had a stake in this string of successes had it not turned down Apple’s offer in 2005 to become the chip supplier for the iPhone.

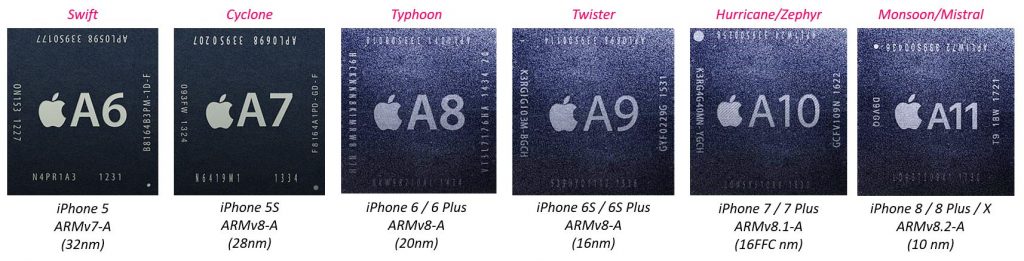

Back in 2008, Apple secretly signed an architecture license with ARM so that it could design its own chips from the ground up using ARM’s architectures [7]. It also acquired PA Semi, a company founded by Dan Dobberpuhl, a specialist in the development of high-performance ARM chips. These strategic moves were the keys to its success in bringing up the A-series chips, which started with the iPhone 4’s A4. In 2012, Apple released its first fully custom-designed CPU, the A6 (codename Swift), which was used in the iPhone 5 and was twice as fast as the previous chip.

But the real head-turner came in 2013, when Apple shocked the whole industry with the A7 (codename Cyclone) used in the iPhone 5S. While all other ARM-based chips at that time were using a 32-bit architecture, the A7 was an early adoption of ARM’s 64-bit architecture, which means Apple beat ARM’s own design teams by more than a year. ARM’s own 64-bit design would not be seen until late 2014, in the Galaxy Note 4. From this point, Apple’s next-generation microprocessors would slowly close the performance gap with Intel’s, approaching what Apple called a desktop-class architecture.

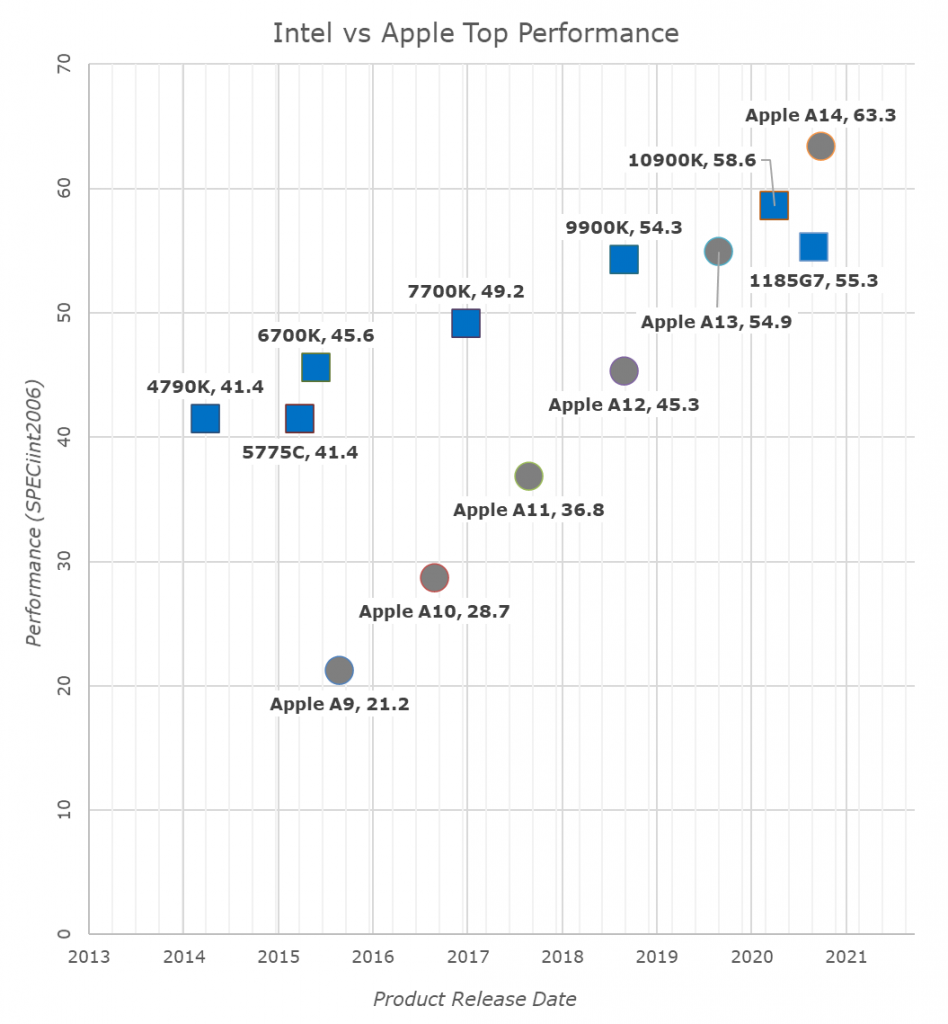

Inside the iPhones, ARM-based chips designed by Apple kept evolving, getting faster while using less power. By September 2020, with the A14 Bionic (codename Firestorm/Icestorm) used in the iPhone 12 / 12 Pro / 12 Pro Max, Apple appeared to be catching up to Intel. The Intel chip at the top of the chart, the Core i9-10900K, is a desktop chip rated at 125 Watts, while Apple’s A14, which has broadly comparable performance, uses about 6 Watts from a phone battery. Intel’s lead in CPU benchmarks is slowly evaporating. Meanwhile, by 2019, 130 billion ARM processors had been produced [8], making ARM the most widely used ISA in the world [9].

IP licensing

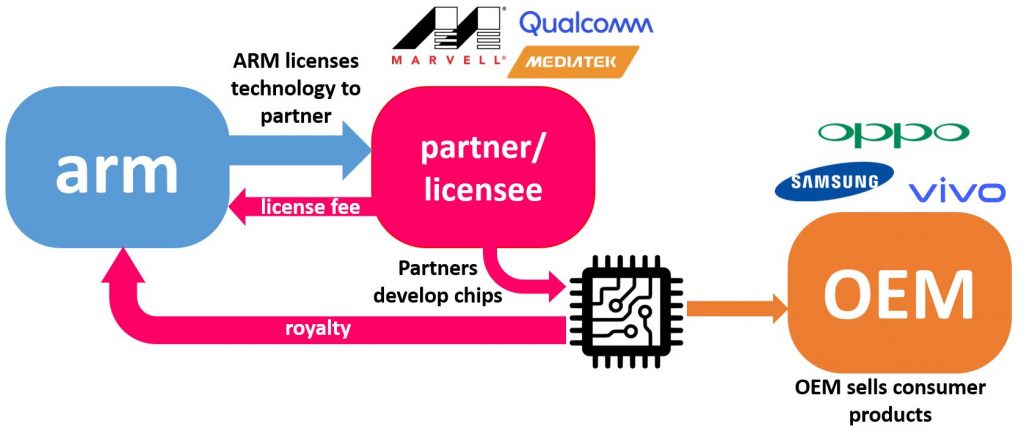

ARM has a distinctive business model. Unlike AMD, Intel, Qualcomm, or NVIDIA, which ultimately make money by selling someone a chip, ARM earns its revenue entirely from IP licensing. There are two amounts that all ARM licensees have to pay: an upfront license fee and a royalty. The upfront payment grants ARM’s partners the rights to use certain designs, such as its high-end Cortex CPU cores and Mali GPUs. The royalty is a certain percentage of the selling price of every chip that has ARM’s IP on it.
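The two-part payment described above boils down to simple arithmetic. The sketch below is only an illustration: the function name `licensing_cost` and every figure in the example are invented, and real license fees and royalty rates are negotiated privately and are not stated in this article.

```python
def licensing_cost(upfront_fee, royalty_rate, chip_price, chips_sold):
    """Total paid by a licensee: a one-time upfront fee plus a royalty,
    charged as a fraction of the selling price of every chip shipped."""
    royalties = royalty_rate * chip_price * chips_sold
    return upfront_fee + royalties


# Purely hypothetical numbers, chosen only to show the shape of the model.
example_total = licensing_cost(
    upfront_fee=1_000_000,   # one-time fee for the right to use a design
    royalty_rate=0.02,       # 2% of each chip's selling price
    chip_price=10.0,         # selling price per chip
    chips_sold=5_000_000,    # units shipped
)
print(f"Total paid to the IP owner: {example_total:,.0f}")
```

Because the royalty scales with units shipped, the IP owner’s income grows with its partners’ sales even though it never manufactures a chip itself.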

Most of ARM’s partners purchase processor licenses: the rights to use a microprocessor or GPU that ARM designed. They cannot change the design, but they are free to implement it in their own way so that the final chip may reach a higher frequency or lower power consumption. For example, the Samsung Exynos 5 Octa implements 4 ARM Cortex-A7 cores and 4 ARM Cortex-A15 cores. And in the case of the Cortex-A8, Apple and Samsung had their own physical implementations of the core that resulted in better performance than other designs.

Another option for partners is to purchase an architecture license: a license for the ISA, the imaginary computer that ARM developed, on which they can build their own processor. This is the more difficult approach, the one Qualcomm took to build Scorpion, Krait, and the 64-bit Kryo, and the one Apple took to build Swift. These designs are physically very different but are able to run the same software as any other ARM machine.

Conclusion

Over the past decade of rivalry between ARM and Intel x86, ARM has won out as the choice for low-power devices like smartphones. The architecture is now making inroads into the laptop market, embraced by both Apple with its M1 processor and Microsoft with the SQ1 in the Surface Pro X. However, Intel is also making prominent developments, with Lakefield now sharing many commonalities with traditional ARM designs.

In 2016, ARM was acquired by SoftBank, a Japanese conglomerate under the leadership of Masayoshi Son, for USD 32 billion. Four years later, on September 13th, 2020, NVIDIA announced an agreement to acquire this influential chip designer for as much as USD 40 billion, a total that would make it the largest semiconductor deal ever. Even though the fate of the deal is still unclear, since it will have to be approved by many regulators, it is clear that ARM will remain the architecture of choice for the smartphone industry for the foreseeable future. It has started a transformation across the semiconductor world, and it is fascinating to be living through such moments of innovation and revolution.

References:

[1] VLSI Technology, Inc. (1990). Acorn RISC Machine Family Data Manual. Prentice-Hall. ISBN 9780137816187.

[2] Acorn Archimedes Promotion from 1987. 1987.

[3] Wilson, Roger. “Some facts about the Acorn RISC Machine”.

[4] “Reverse engineering the ARM1, ancestor of the iPhone’s processor”. Ken Shirriff’s blog.

[5] Acorn Archimedes Promotion from 1987 on YouTube.

[6] Weber, Jonathan (28 November 1990). “Apple to Join Acorn, VLSI in Chip-Making Venture”. Los Angeles Times. Los Angeles. Retrieved 6 February 2012. Quote: “Apple has invested about $3 million (roughly 1.5 million pounds) for a 30% interest in the company, dubbed Advanced Risc Machines Ltd. (ARM) […]”

[7] “How Apple Designed Own CPU For A6”. Linley on Mobile. 15 September 2012.

[8] “Architecting a smart world and powering Artificial Intelligence: ARM”. The Silicon Review. 2019. Retrieved 8 April 2020.

[9] Kerry McGuire Balanza (11 May 2010). “ARM from zero to billions in 25 short years”. Arm Holdings. Retrieved 8 November 2012.
